Neuromorphic Computing: The Brain-Inspired Future of Tech

In a world where traditional computing struggles to keep pace with our insatiable appetite for data processing, a new paradigm is emerging. Neuromorphic computing, inspired by the intricate workings of the human brain, promises to revolutionize how we approach artificial intelligence, data analysis, and even our understanding of cognition itself. This cutting-edge technology aims to bridge the gap between silicon and synapses, potentially unlocking unprecedented levels of efficiency and capability in our devices.

For decades, this concept remained largely theoretical. The traditional von Neumann architecture, with its separate processing and memory units, dominated the computing landscape. However, as transistor scaling nears its physical limits and the pace described by Moore’s Law slows, researchers and tech companies have begun to explore alternative computing paradigms more seriously.

How Neuromorphic Systems Work

At its core, neuromorphic computing attempts to replicate the brain’s neural structure and function using specialized hardware. Unlike traditional computers, which execute instructions largely in sequence and shuttle data back and forth between separate memory and processing units, neuromorphic systems use artificial neurons and synapses that keep memory and computation close together and process information in a parallel, event-driven manner.

These systems typically consist of large arrays of neuron-like elements interconnected by artificial synapses. When an input signal arrives, it triggers a cascade of neuron activations, mimicking the way information flows through our brains. This approach allows for more efficient processing of certain types of tasks, particularly those involving pattern recognition and sensory processing.
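
To make the idea of a cascade concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron, one common simplified neuron model. It is an illustration under assumptions, not a description of how any particular chip works: the `LIFNeuron` class, the `run_chain` helper, and the threshold, leak, and weight values are all hypothetical. The loop also steps through time on a fixed clock, whereas real neuromorphic hardware reacts to spikes as they occur.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (simplified, illustrative model)."""
    threshold: float = 1.0   # potential at which the neuron fires (assumed value)
    leak: float = 0.9        # fraction of potential kept each step (assumed value)
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Old potential decays, then the new input is added on top.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run_chain(inputs, weights):
    """Feed a 0/1 input spike train through a chain of neurons.

    inputs  -- list of 0/1 input spikes, one per time step
    weights -- synaptic weight feeding each neuron in the chain
    Returns one 0/1 spike train per neuron.
    """
    neurons = [LIFNeuron() for _ in weights]
    trains = [[] for _ in weights]
    for spike in inputs:
        signal = spike
        for i, (neuron, weight) in enumerate(zip(neurons, weights)):
            fired = neuron.step(weight * signal)
            trains[i].append(int(fired))
            signal = int(fired)  # a spike is passed on to the next neuron
    return trains
```

With a steady input and a first-stage weight of 0.6, the first neuron needs two inputs to reach its threshold, so `run_chain([1, 1, 1, 1], [0.6, 1.5])` yields a spike on every second step in both neurons. When no input spikes arrive, nothing fires at all, which is the property that lets event-driven hardware sit idle and save power.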

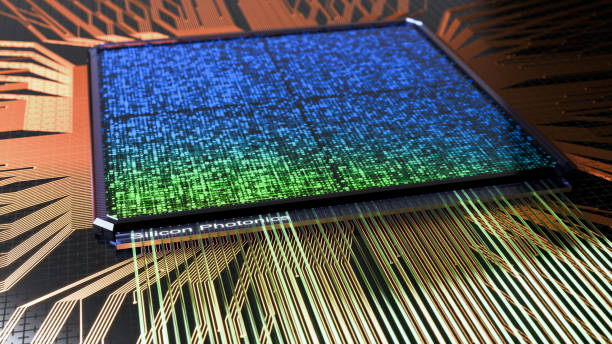

The Hardware Behind the Magic

Several approaches to neuromorphic hardware have emerged in recent years. One prominent example is IBM’s TrueNorth chip, which boasts a million digital neurons and 256 million synapses. Intel has also entered the fray with its Loihi research chip, designed to accelerate AI workloads while using significantly less power than traditional processors.

Other notable projects include BrainScaleS, a European initiative that emulates neurons with analog, mixed-signal circuits, and the SpiNNaker project, which uses custom chips packed with many small ARM processor cores to simulate large-scale spiking neural networks. These efforts showcase the diversity of approaches in the field, from analog designs that closely mimic biological neurons to more abstract digital implementations.

Potential Applications and Impact

The potential applications of neuromorphic computing are vast and varied. In the realm of artificial intelligence, these systems could enable more efficient and capable machine learning models, particularly for tasks involving real-time processing of sensory data. This could lead to breakthroughs in areas such as computer vision, natural language processing, and robotics.

Beyond AI, neuromorphic systems could revolutionize scientific research by enabling more accurate simulations of biological systems. This could accelerate drug discovery, enhance our understanding of brain disorders, and even aid in the development of brain-computer interfaces.

In the consumer tech space, neuromorphic chips could pave the way for more energy-efficient smartphones and IoT devices capable of complex on-device processing. Imagine a smart home system that can recognize and respond to voice commands, gestures, and environmental changes with the speed and efficiency of a human brain.

Challenges and Future Outlook

Despite its promise, neuromorphic computing faces several challenges. One of the most significant hurdles is scalability. While current neuromorphic chips can simulate thousands or even millions of neurons, they still fall far short of the human brain’s estimated 86 billion neurons and trillions of synapses.
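
A rough back-of-the-envelope calculation gives a sense of the gap. The sketch below uses the TrueNorth figures quoted earlier and the 86 billion neuron estimate. The synapse count of 100 trillion is an assumption chosen purely for illustration, since estimates vary, and `chips_needed` is a hypothetical helper. It counts neurons and synapses only and ignores how such chips would be wired together.

```python
BRAIN_NEURONS = 86_000_000_000         # estimate quoted in the text
BRAIN_SYNAPSES = 100_000_000_000_000   # ASSUMPTION: ~100 trillion, for illustration only
CHIP_NEURONS = 1_000_000               # TrueNorth figure quoted in the article
CHIP_SYNAPSES = 256_000_000            # TrueNorth figure quoted in the article


def chips_needed(brain_count: int, per_chip: int) -> int:
    """Smallest whole number of chips whose combined capacity covers brain_count."""
    return -(-brain_count // per_chip)  # integer ceiling division


if __name__ == "__main__":
    print("Chips needed by neuron count: ", chips_needed(BRAIN_NEURONS, CHIP_NEURONS))
    print("Chips needed by synapse count:", chips_needed(BRAIN_SYNAPSES, CHIP_SYNAPSES))
```

Under these assumptions, matching the brain would take 86,000 such chips by neuron count and roughly 390,000 by synapse count, which suggests that connectivity, rather than the number of neurons, may be the harder target.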

Another challenge lies in programming these systems. Traditional software development practices don’t directly translate to neuromorphic architectures, requiring new paradigms and tools for developers to harness their full potential.

However, the field is progressing rapidly. Recent breakthroughs in materials science and nanotechnology are opening up new possibilities for creating more brain-like computing structures. For instance, researchers are exploring the use of memristors – electronic components that can “remember” the amount of charge that has flowed through them – to create more efficient and scalable neuromorphic systems.
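
The “remembering” behavior can be illustrated with a deliberately idealized Python sketch. Everything here is an assumption made for illustration: the `Memristor` class, the linear relationship between charge and internal state, and the resistance and charge values do not describe any real device. The point is only that the resistance depends on the charge that has passed through, and stays put when no current flows.

```python
class Memristor:
    """Idealized memristor: resistance depends on the net charge that has flowed."""

    def __init__(self, r_on=100.0, r_off=16_000.0, q_max=1e-4):
        self.r_on = r_on      # resistance when fully "on", in ohms (assumed value)
        self.r_off = r_off    # resistance when fully "off", in ohms (assumed value)
        self.q_max = q_max    # charge to switch fully off -> on, in coulombs (assumed)
        self.state = 0.0      # 0.0 = fully off, 1.0 = fully on

    @property
    def resistance(self) -> float:
        # Linear blend between the two extremes, set by the internal state.
        return self.r_on * self.state + self.r_off * (1.0 - self.state)

    def apply_voltage(self, voltage: float, duration: float) -> float:
        """Apply a constant voltage for `duration` seconds; return the current."""
        current = voltage / self.resistance
        charge = current * duration
        # The state tracks accumulated charge, clamped to the range [0, 1].
        self.state = min(1.0, max(0.0, self.state + charge / self.q_max))
        return current
```

In this sketch a positive voltage pulse lowers the resistance, a negative pulse raises it again, and a device left alone keeps its value. That stored resistance is what lets a memristor stand in for a synaptic weight that is both kept and updated in the same physical element.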

Looking ahead, neuromorphic computing stands poised to play a crucial role in shaping the next generation of technology. While it may not entirely replace traditional computing methods, it offers a complementary approach that could unlock new frontiers in artificial intelligence, scientific research, and beyond. As we continue to push the boundaries of what’s possible in computing, the lessons we learn from the human brain may well hold the key to our technological future.